Not convinced?

Check out these other resources to learn more about AI extinction risk:

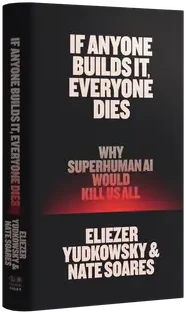

The New York Times bestselling book is the best resource laying out the theoretical case for AI x-risk.

If you prefer a video, check out this interview with Yudkowsky, this audio interview with Soares, or this quick primer by science YouTuber Hank Green.

For answers to frequently asked questions, try https://whycare.aisgf.us/.